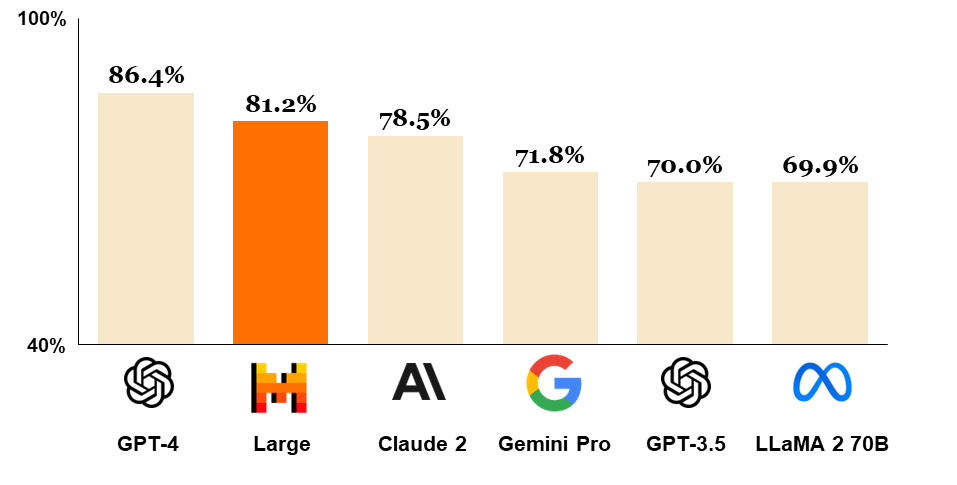

Figure 1: Comparison of GPT-4, Mistral Large (pre-trained), Claude 2, Gemini Pro 1.0, GPT 3.5 and LLaMA 2 70B on MMLU (Measuring massive multitask language understanding).

(IN BRIEF) Mistral AI, an AI company, has introduced Mistral Large, its latest advanced language model, offering top-tier reasoning capabilities and multilingual proficiency. It is now accessible through Azure, in addition to the company’s own platform. The company has also launched Mistral Small, optimized for low-latency tasks. Both models support JSON format and function calling. This move aims to democratize AI by making cutting-edge models readily available and user-friendly.

(PRESS RELEASE) PARIS, 26-Feb-2024 — /EuropaWire/ — Mistral AI, a French AI startup, is proud to unveil Mistral Large, our latest breakthrough in language model technology. Boasting unparalleled reasoning capabilities, Mistral Large sets a new standard for text generation and comprehension. Available through la Plateforme and now accessible via Azure, Mistral Large marks a significant milestone in our mission to democratize cutting-edge AI.

Key Features of Mistral Large:

- State-of-the-Art Reasoning: Mistral Large excels in complex multilingual reasoning tasks, from text understanding to code generation, delivering exceptional performance on industry-standard benchmarks.

- Multilingual Proficiency: Fluent in English, French, Spanish, German, and Italian, Mistral Large offers nuanced language understanding and cultural context sensitivity.

- Extended Context Window: With a 32K-token context window, Mistral Large enables precise information recall from large documents, enhancing its utility for diverse applications.

- Precise Instruction-Following: Developers can tailor moderation policies with Mistral Large’s precise instruction-following capability, ensuring robust system-level moderation.

Partnering with Microsoft Azure:

In line with our commitment to widespread AI accessibility, Mistral is pleased to announce our collaboration with Microsoft Azure. Developers can now access Mistral Large through Azure AI Studio and Azure Machine Learning. Beta customers have used it with significant success. For the most sensitive use cases, our models can also be deployed in your own environment, with access to our model weights. Read our success stories on this kind of deployment, and contact our team for further details.

Mistral Large capacities

We compare Mistral Large’s performance to that of the leading LLMs on commonly used benchmarks.

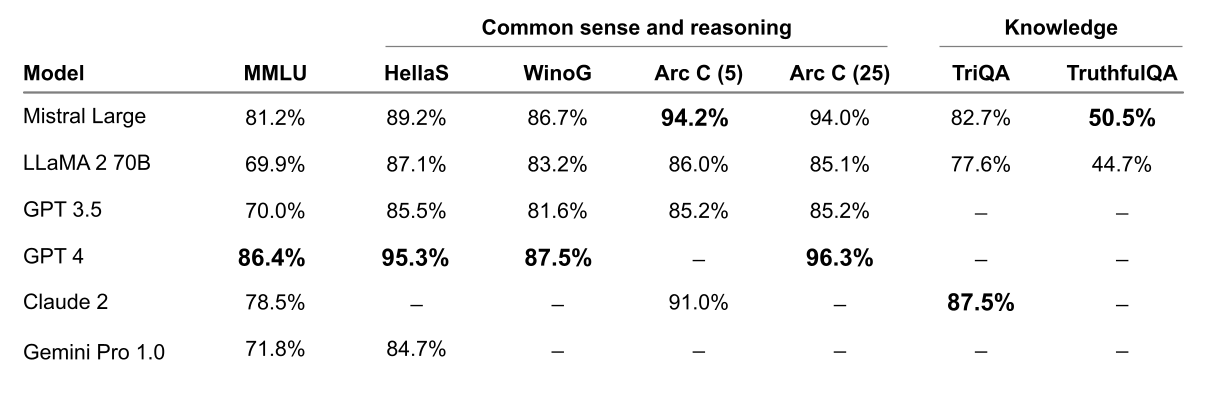

Reasoning and knowledge

Mistral Large shows powerful reasoning capabilities. In the following figure, we report the performance of the pretrained models on standard benchmarks.

Figure 2: Performance on widely used common sense, reasoning and knowledge benchmarks of the leading LLMs on the market: MMLU (Measuring massive multitask language understanding), HellaSwag (10-shot), WinoGrande (5-shot), Arc Challenge (5-shot), Arc Challenge (25-shot), TriviaQA (5-shot) and TruthfulQA.

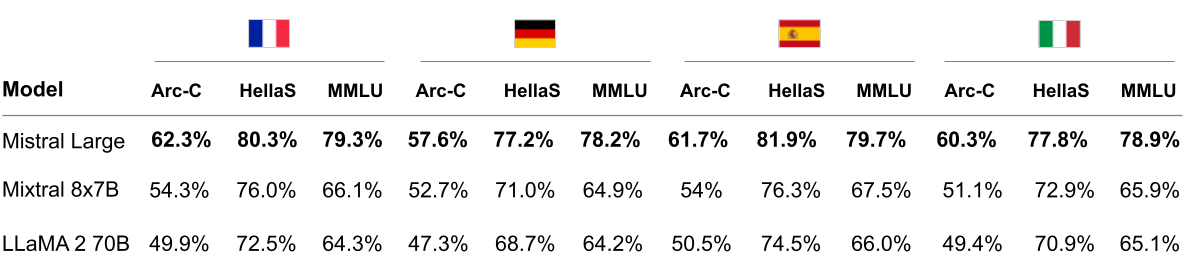

Multi-lingual capacities

Mistral Large has native multi-lingual capacities. It strongly outperforms LLaMA 2 70B on HellaSwag, Arc Challenge and MMLU benchmarks in French, German, Spanish and Italian.

Figure 3: Comparison of Mistral Large, Mixtral 8x7B and LLaMA 2 70B on HellaSwag, Arc Challenge and MMLU in French, German, Spanish and Italian.

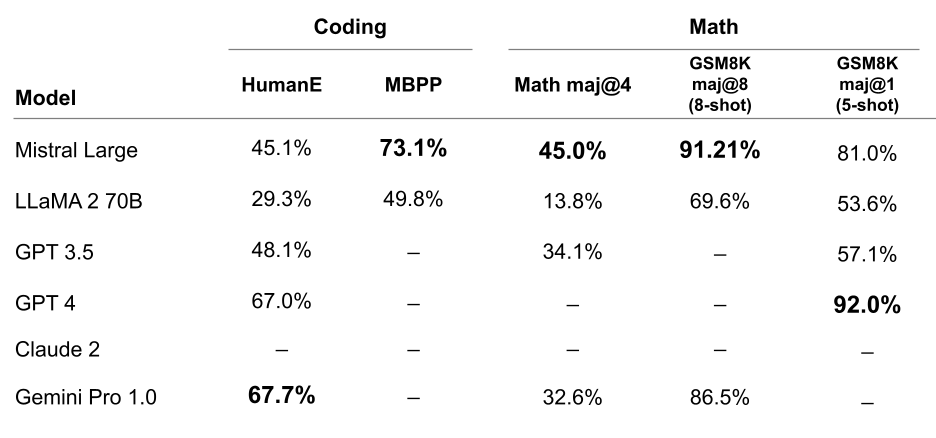

Maths & Coding

Mistral Large shows top performance in coding and math tasks. In the table below, we report performance on a suite of popular coding and math benchmarks for some of the leading LLMs.

Figure 4: Performance on popular coding and math benchmarks of the leading LLMs on the market: HumanEval pass@1, MBPP pass@1, Math maj@4, GSM8K maj@8 (8-shot) and GSM8K maj@1 (5-shot).
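For readers unfamiliar with the metric names in the caption: maj@k is usually read as sampling k answers per problem and scoring the most frequent one against the reference. The Python sketch below illustrates that voting step only. The function names and sample answers are hypothetical, and this is not the evaluation harness behind the reported figures.

```python
from collections import Counter


def majority_answer(samples):
    """Return the most frequent answer among k sampled answers.

    Ties are broken in favour of the answer that appeared first.
    """
    if not samples:
        raise ValueError("need at least one sampled answer")
    counts = Counter(samples)
    # most_common orders equal counts by first occurrence.
    return counts.most_common(1)[0][0]


def maj_at_k(problems):
    """Fraction of problems whose majority answer matches the reference.

    `problems` is a list of (sampled_answers, reference_answer) pairs.
    """
    correct = sum(
        1 for samples, reference in problems
        if majority_answer(samples) == reference
    )
    return correct / len(problems)


# Hypothetical data: two problems, four sampled answers each (maj@4).
problems = [
    (["42", "41", "42", "42"], "42"),  # majority matches the reference
    (["7", "9", "9", "8"], "7"),       # majority does not match
]
score = maj_at_k(problems)
```

With k = 1 the vote reduces to scoring a single sample, which is how maj@1 can be read.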

Introducing Mistral Small:

Complementing Mistral Large, we’re also launching Mistral Small, an optimized model designed for low latency workloads. Offering superior performance and reduced latency compared to previous models, Mistral Small bridges the gap between our open-weight offerings and our flagship model.

Enhanced Endpoint Offering:

To streamline accessibility, we’re simplifying our endpoint offerings, providing open-weight endpoints with competitive pricing alongside new optimized model endpoints. Our benchmarks offer comprehensive insights into performance and cost tradeoffs, empowering organizations to make informed decisions.

Alongside Mistral Large, we’re releasing a new optimised model, Mistral Small, optimised for latency and cost. Mistral Small outperforms Mixtral 8x7B and has lower latency, which makes it a refined intermediary solution between our open-weight offering and our flagship model.

Mistral Small benefits from the same innovation as Mistral Large regarding RAG-enablement and function calling.

We’re simplifying our endpoint offering to provide the following:

- Open-weight endpoints with competitive pricing. This comprises open-mistral-7B and open-mixtral-8x7b.

- New optimised model endpoints, mistral-small-2402 and mistral-large-2402. We’re maintaining mistral-medium, which we are not updating today.

Our benchmarks give a comprehensive view of performance/cost tradeoffs.

JSON Format and Function Calling:

Mistral Small and Mistral Large now support JSON format mode and function calling, enabling seamless integration with developers’ workflows. This functionality enhances interaction with our models, facilitating structured data extraction and complex interactions with internal systems.
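As a rough illustration of JSON format mode, the Python sketch below builds a request body that asks for a JSON object and then parses a reply. It assumes the endpoint accepts an OpenAI-style `response_format` field. The helper names and the hard-coded reply are hypothetical, and no network call is made, so check the API reference for the exact schema.

```python
import json


def build_json_mode_request(model, user_prompt):
    """Build a chat request body that asks for a JSON object reply.

    Assumption: the endpoint accepts an OpenAI-style `response_format`
    field; the exact schema should be checked against the API reference.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "response_format": {"type": "json_object"},
    }


def parse_json_reply(content):
    """Parse the model's reply, failing loudly if it is not a JSON object."""
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


request_body = build_json_mode_request(
    "mistral-small-2402",
    "Extract the city and date as JSON: 'Paris, 26 February'.",
)

# Hypothetical reply content, hard-coded so the sketch runs offline.
reply = '{"city": "Paris", "date": "26 February"}'
extracted = parse_json_reply(reply)
```

Validating the parsed reply before using it is a sensible habit even with JSON mode enabled, since downstream code usually expects specific keys.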

Function calling lets developers interface Mistral endpoints with a set of their own tools, enabling more complex interactions with internal code, APIs or databases. You will learn more in our function calling guide.

Function calling and JSON format are only available on mistral-small and mistral-large. We will be adding formatting to all endpoints shortly, as well as enabling more fine-grained format definitions.
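The function calling flow described above can be sketched as follows: the developer describes a tool, the model replies with the name and arguments of the tool it wants to run, and the application dispatches that call to local code. The tool schema and the shape of the tool call are assumed to follow the common OpenAI-style convention. `get_order_status`, the in-memory data and the simulated call are hypothetical stand-ins.

```python
import json


def get_order_status(order_id):
    """Hypothetical internal tool; a real one would query a database."""
    fake_db = {"A-100": "shipped", "A-101": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Tool description sent with the request (assumed OpenAI-style schema).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the status of an order",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
REGISTRY = {"get_order_status": get_order_status}


def dispatch(tool_call):
    """Run the local function named in a tool call and return its result."""
    name = tool_call["function"]["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested unknown tool: {name}")
    # Arguments arrive as a JSON-encoded string in this assumed format.
    arguments = json.loads(tool_call["function"]["arguments"])
    return REGISTRY[name](**arguments)


# Hypothetical tool call, hard-coded in place of a real model response.
simulated_call = {
    "function": {
        "name": "get_order_status",
        "arguments": '{"order_id": "A-100"}',
    }
}
result = dispatch(simulated_call)
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed.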

Experience Mistral Today:

Mistral Large is now available on la Plateforme and Azure, with Mistral Small offering optimized performance for latency-sensitive applications. Mistral Large is also exposed on our beta assistant demonstrator, le Chat. Try Mistral today and join us in shaping the future of AI innovation. We value your feedback as we continue to push the boundaries of language model technology.

Media contact:

contact@mistral.ai

SOURCE: Mistral AI
